The low point in Palantir’s very first search for investors came during a pitch meeting in 2004 that CEO Alex Karp and a few colleagues had with Sequoia Capital, which was arguably the most influential Silicon Valley VC firm. Sequoia had been an early investor in PayPal; its best-known partner, Michael Moritz, sat on the company’s board and was close to PayPal founder Peter Thiel, who had recently launched Palantir. But Sequoia proved no more receptive to Palantir than any of the other VCs that Karp and his team visited; according to Karp, Moritz spent most of the meeting absentmindedly doodling in his notepad.

Karp didn’t say anything at the time, but later wished that he had. “I should have told him to go fuck himself,” he says, referring to Moritz. But it wasn’t just Moritz who provoked Karp’s ire: the VC community’s lack of enthusiasm for Palantir made Karp contemptuous of professional investors in general. It became a grudge that he nurtured for years after.

From The Philosopher in the Valley: Alex Karp, Palantir, and the Rise of the Surveillance State by Michael Steinberger. Copyright © 2025. Reprinted by permission of Avid Reader Press, an Imprint of Simon & Schuster Inc.

But the meetings on Sand Hill Road weren’t entirely fruitless. After listening to Karp’s pitch and politely declining to put any money into Palantir, a partner with one venture capital firm had a suggestion: if Palantir was really intent on working with the government, it could reach out to In-Q-Tel, the CIA’s venture capital arm. In-Q-Tel had been started a few years earlier, in 1999 (the name was a playful reference to “Q,” the technology guru in James Bond films). CIA Director George Tenet believed that establishing a quasi-public venture capital fund through which the agency could incubate start-ups would help ensure that the U.S. intelligence community retained a technological edge.

The CIA had been created in 1947 for the purpose of preventing another Pearl Harbor, and a half century on, its primary mission was still to prevent attacks on American soil. Two years after In-Q-Tel was founded, the country experienced another Pearl Harbor, the 9/11 terrorist attacks, a humiliating intelligence failure for the CIA and Tenet. At the time, In-Q-Tel was working out of a Virginia office complex known, ironically, as the Rosslyn Twin Towers, and from the twenty-ninth-floor office, employees had an unobstructed view of the burning Pentagon.

In-Q-Tel’s CEO was Gilman Louie, who had worked as a video game designer before being recruited by Tenet (Louie specialized in flight simulators; his were so realistic that they were used to help train Air National Guard pilots). Ordinarily, Louie didn’t take part in pitch meetings; he let his deputies do the initial screening. But because Thiel was involved, he made an exception for Palantir and sat in on its first meeting with In-Q-Tel.

What Karp and the other Palantirians didn’t know when they visited In-Q-Tel was that the CIA was in the market for new data analytics technology. At the time, the agency was mainly using a program called Analyst’s Notebook, which was made by i2, a British company. According to Louie, Analyst’s Notebook had a good interface but had certain deficiencies when it came to data processing that limited its utility.

“We didn’t think their architecture would allow us to build next-generation capabilities,” Louie says.

Louie found Karp’s pitch impressive. “Alex presented well,” he recalls. “He was very articulate and very passionate.” As the conversation went on, Karp and his colleagues talked about IGOR, PayPal’s pioneering fraud-detection system, and how it had basically saved PayPal’s business, and it became apparent to Louie that they might just have the technical aptitude to deliver what he was looking for.

But he told them that the interface was vital: the software would need to organize and present information in a way that made sense for the analysts using it, and he described some of the features they would expect. Louie says that as soon as he brought this up, the Palantir crew “got out of sales mode and immediately switched into engineering problem-solving mode” and began brainstorming in front of the In-Q-Tel team.

“That was what I wanted to see,” says Louie.

He sent them away with a homework assignment: he asked them to design an interface that would likely appeal to intelligence analysts. On returning to Palo Alto, Stephen Cohen, one of Palantir’s co-founders, then 22 years old, and an ex-PayPal engineer named Nathan Gettings sequestered themselves in a room and built a demo that included the elements that Louie had highlighted.

A few weeks later, the Palantirians returned to In-Q-Tel to show Louie and his colleagues what they had come up with. Louie was impressed by its intuitive logic and elegance. “If Palantir doesn’t work, you guys have a bright future designing video games,” he joked.

In-Q-Tel ended up investing $1.25 million in exchange for equity; with that vote of confidence, Thiel put up another $2.84 million. (In-Q-Tel did not get a board seat in return for its investment; even after Palantir began attracting significant outside money, the company never gave up a board seat, which was unusual, and to its great advantage.)

Karp says the most valuable aspect of In-Q-Tel’s investment was not the money but the access that it gave Palantir to the CIA analysts who were its intended customers. Louie believed that the only way to determine whether Palantir could really help the CIA was to embed Palantir engineers within the agency; to build software that was actually useful, the Palantirians needed to see for themselves how the analysts operated. “A machine is not going to understand your workflows,” Louie says. “That’s a human function, not a machine function.”

The other reason for embedding the engineers was that it would expedite the process of figuring out whether Palantir could, in fact, be helpful. If the CIA analysts didn’t think Palantir was capable of giving them what they needed, they were going to quickly let their superiors know. “We were at war,” says Louie, “and people didn’t have time to waste.”

Louie had the Palantir team assigned to the CIA’s terrorism finance desk. There they would be exposed to large data sets, and also to data collected by financial institutions as well as the CIA. This would be a good test of whether Karp and his colleagues could deliver: tracking the flow of money was going to be critical to disrupting future terrorist plots, and it was exactly the kind of task that the software would have to perform in order to be of use to the intelligence community.

But Louie also had another motive: although Karp and Thiel were focused on working with the government, Louie thought that Palantir’s technology, if it proved viable, could have applications outside the realm of national security, and if the company hoped to attract future investors, it would eventually need to develop a strong commercial business.

Stephen Cohen and engineer Aki Jain worked directly with the CIA analysts. Both had to obtain security clearances, and over time, numerous other Palantirians would do the same. Some, however, refused: they worried about Big Brother, or they didn’t want the FBI combing through their financial records, or they enjoyed smoking pot and didn’t want to give it up. Karp was one of the refuseniks, as was Joshua Goldenberg, the head of design. Goldenberg says there were times when engineers working on classified projects needed his help. But because they couldn’t share certain information with him, they would resort to hypotheticals.

As Goldenberg recalls, “Someone might say, ‘Imagine there’s a jewel thief and he’s stolen a diamond, and he’s now in a city and we have people following him. What would that look like? What tools would you need to be able to do that?’”

Starting in 2005, Cohen and Jain traveled on a biweekly basis from Palo Alto to the CIA’s headquarters in Langley, Virginia. In all, they made the trip roughly 200 times. They became so familiar at the CIA that analysts there nicknamed Cohen “Two Weeks.” The Palantir duo would bring with them the latest version of the software, the analysts would test it out and provide feedback, and Cohen and Jain would return to California, where they and the rest of the team would address whatever problems had been identified and make other tweaks. In working side by side with the analysts, Cohen and Jain were pioneering a role that would become one of Palantir’s signatures.

It turned out that dispatching software engineers to job sites was a shrewd strategy. It was a way of discovering what clients really needed in the way of technological help, of developing new features that could potentially be of use to other customers, and of building relationships that might lead to more business within an organization. The forward-deployed engineers, as they came to be called, proved to be almost as essential to Palantir’s eventual success as the software itself. But it was that original deployment to the CIA, and the iterative process that it spawned, that enabled Palantir to successfully build Gotham, its first software platform.

Ari Gesher, an engineer who was hired in 2005, says that from a technology standpoint, Palantir was pursuing a very ambitious goal. Some software companies specialized in front-end products, the stuff you see on your screen. Others focused on the back end, the processing functions. Palantir, says Gesher, “understood that you needed to do deep investments in both to generate outcomes for users.”

According to Gesher, Palantir also stood apart in that it aimed to be both a product company and a service company. Most software makers were one or the other: they either custom-built software, or they sold off-the-shelf products that could not be tailored to the specific needs of a client. Palantir was building an off-the-shelf product that could also be customized.

Despite his lack of technical training (or, perhaps, because of it), Karp had also come up with a novel idea for addressing worries about civil liberties: he asked the engineers to build privacy controls into the software. Gotham was ultimately equipped … with two guardrails: users were able to access only information that they were authorized to view, and the platform generated an audit trail that indicated if someone tried to obtain material off-limits to them. Karp liked to call it a “Hegelian” remedy to the challenge of balancing public safety and civil liberties, a synthesis of seemingly unreconcilable objectives. As he told Charlie Rose during an interview in 2009, “It’s the ultimate Silicon Valley solution: you remove the contradiction, and we all march forward.”
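The two guardrails can be pictured with a small Python sketch. It is purely illustrative and is not Gotham’s actual design: the `GuardedStore` class, its field names, and the clearance labels are all hypothetical, invented here to show how an access check and an audit trail might sit side by side.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class GuardedStore:
    """Hypothetical record store combining an access check with an audit trail."""

    # Maps record_id -> (required_clearance, content). Illustrative layout only.
    records: dict
    audit_log: list = field(default_factory=list)

    def read(self, user, clearances, record_id):
        required, content = self.records[record_id]
        allowed = required in clearances
        # Log every attempt, successful or not, so that denied requests
        # leave a trace that a reviewer can inspect later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "record": record_id,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{user} is not authorized to view {record_id}")
        return content
```

In this sketch the log entry is written before the permission error is raised, which is what lets the trail show that someone tried to reach material that was off-limits to them.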

In the end, it took Palantir around three years, lots of setbacks, and a few near-death experiences to develop a marketable software platform that met these parameters.

“There were moments where we were like, ‘Is this ever going to see the light of day?’” Gesher says. The work was arduous, and there were times when the money ran short. A few key people grew frustrated and talked of quitting.

Palantir also struggled to win converts at the CIA. Although In-Q-Tel was backing Palantir, analysts were not obliged to switch to the company’s software, and some who tried it were underwhelmed.

But in what would become another pattern in Palantir’s rise, one analyst was not just won over by the technology; she became a kind of in-house evangelist on Palantir’s behalf. Sarah Adams discovered Palantir not at Langley, but rather on a visit to Silicon Valley in late 2006. Adams worked on counterterrorism, as well, but in a different section. She joined a group of CIA analysts at a conference in the Bay Area devoted to emerging technologies. Palantir was one of the vendors, and Stephen Cohen demoed its software. Adams was intrigued by what she saw, exchanged contact information with Cohen, and upon returning to Langley asked her boss if her unit could do a pilot program with Palantir. He signed off on it, and a few months later, Adams and her colleagues were using Palantir’s software.

Adams says that the first thing that jumped out at her was the speed with which Palantir churned data. “We were a fast-moving shop; we were kind of the point of the spear, and we needed faster analytics,” she says.

According to Adams, Palantir’s software also had a “smartness” that Analyst’s Notebook lacked. It wasn’t just better at unearthing connections; even its basic search function was superior. Often, names would be misspelled in reports, or phone numbers would be written in different formats (dashes between numbers, no dashes between numbers). If Adams typed in “David Petraeus,” Palantir’s search engine would bring up all the available references to him, including ones where his name had been incorrectly spelled. This ensured that she wasn’t deprived of potentially crucial information simply because another analyst or a source in the field didn’t know that it was “Petraeus.”
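How Palantir’s search handled misspellings is not described here, but the general idea of tolerant matching can be sketched in a few lines of Python. The version below is an assumption-laden illustration, not the company’s method: it uses the standard library’s `difflib.SequenceMatcher` for name similarity, and the function names, the report layout, and the 0.8 cutoff are all hypothetical choices.

```python
import re
from difflib import SequenceMatcher


def normalize_phone(raw):
    """Strip everything but digits so '555-867-5309' and '5558675309' compare equal."""
    return re.sub(r"\D", "", raw)


def name_matches(query, candidate, threshold=0.8):
    """Treat two names as the same person if their similarity ratio clears a cutoff.

    The 0.8 threshold is an arbitrary illustrative value, not a tuned one.
    """
    ratio = SequenceMatcher(None, query.lower(), candidate.lower()).ratio()
    return ratio >= threshold


def search(query, reports):
    """Return reports that mention a name close enough to the query."""
    return [r for r in reports if any(name_matches(query, n) for n in r["names"])]
```

With a report that spells the name “David Patreaus,” a query for “David Petraeus” would still surface it, while a report naming an unrelated person would not. A looser cutoff finds more misspellings at the cost of more false matches.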

Beyond that, Palantir’s software just seemed to reflect an understanding of how Adams and other analysts did their jobs: the kind of questions they were seeking to answer, and how they wanted the answers presented. She says that Palantir “made my job a thousand times easier. It made a huge difference.”

Her advocacy was instrumental in Palantir securing a contract with the CIA. Similar stories would play out in later deployments: one employee would end up championing Palantir, and that person’s proselytizing would eventually lead to a deal.

But the CIA was the breakthrough: it was proof that Palantir had developed software that really worked, and also the realization of the ambition that had brought the company into being. Palantir had been founded by Peter Thiel for the purpose of assisting the U.S. government in the war on terrorism, and now the CIA had formally enlisted its help in that battle.

Palantir’s foray into domestic law enforcement was an extension of its counterterrorism work. In 2007, the New York City Police Department’s intelligence unit began a pilot program using Palantir’s software. Before 9/11, the intelligence division had mainly focused on crime syndicates and narcotics. But its mandate changed after the terrorist attacks. The city tapped David Cohen, a CIA veteran who had served as the agency’s deputy director of operations, to run the unit, and with the city’s blessing, he turned it into a full-fledged intelligence service employing some one thousand officers and analysts. Several dozen members of the team were posted overseas, in cities including Tel Aviv, Amman, Abu Dhabi, Singapore, London, and Paris.

“The rationale for the N.Y.P.D.’s transformation after September 11th had two distinct facets,” The New Yorker’s William Finnegan wrote in 2005. “On the one hand, expanding its mission to include terrorism prevention made obvious sense. On the other, there was a strong feeling that federal agencies had let down New York City, and that the city should no longer count on the Feds for its protection.” Finnegan noted that the NYPD was encroaching on areas normally reserved for the FBI and the CIA but that the federal agencies had “silently acknowledged New York’s right to take extraordinary defensive measures.”

Cohen became familiar with Palantir while he was still with the CIA, and he decided that the company’s software could be of help to the intelligence unit. In what was becoming a familiar refrain, there was internal resistance. “For the average cop, it was just too complicated,” says Brian Schimpf, one of the first forward-deployed engineers assigned to the NYPD.

“They’d be like, ‘I just need to look up license plates, bro; I don’t need to be doing these crazy analytical processes.’” IBM’s technology was the de facto incumbent at the NYPD, which also made it hard to convert people.

Another stumbling block was price: Palantir was expensive, and while the NYPD had an ample budget, not everyone thought it was worth the investment. But the software caught on with some analysts, and over time, what began as a counterterrorism deployment moved into other areas, such as gang violence.

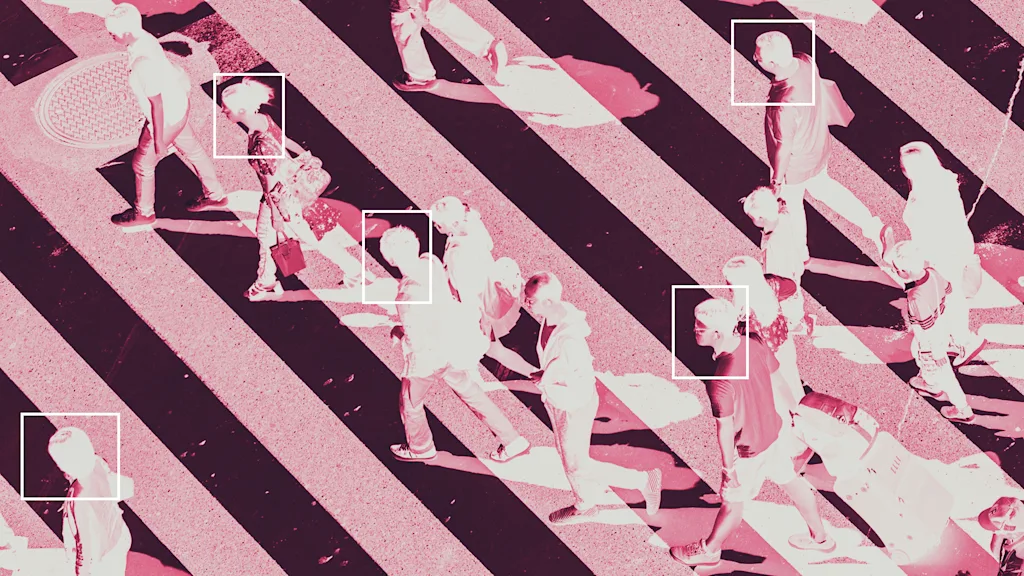

This mission creep was something that privacy advocates and civil libertarians anticipated. Their foremost worry, in the aftermath of 9/11, was that innocent people would be ensnared as the government turned to mass surveillance to prevent future attacks, and the NSA scandal proved that these concerns were warranted.

But another concern was that tools and tactics used to prosecute the war on terrorism would eventually be turned on Americans themselves. The increased militarization of police departments showed that “defending the homeland” had indeed morphed into something more than just an effort to thwart jihadis. Likewise, police departments also began to use advanced surveillance technology.

Andrew Guthrie Ferguson, a professor of law at George Washington University who has written extensively about policing and technology, says that capabilities that had been developed to meet the terrorism threat were now “being redirected on the domestic population.”

Palantir was part of this trend. In addition to its work with the NYPD, it provided its software to the Cook County Sheriff’s Office (a relationship that was part of a broader engagement with the city and that would dissolve in controversy). However, it attracted much of its police business in its own backyard, California. The Long Beach and Burbank Police Departments used Palantir, as did sheriff’s departments in Los Angeles and Sacramento counties. The company’s technology was also used by several Fusion Centers in California, regional intelligence bureaus established after 9/11 to foster closer collaboration between federal agencies and state and local law enforcement. The focus was on countering terrorism and other criminal activities.

But Palantir’s most extensive and longest-lasting law enforcement contract was with the Los Angeles Police Department. It was a relationship that began in 2009. The LAPD was looking for software that could improve situational awareness for officers in the field, software that would allow them to quickly access information about, say, a suspect or about previous criminal activity on a particular street. Palantir’s technology soon became a general investigative tool for the LAPD.

The department also started using Palantir for a crime-prevention initiative called LASER. The goal was to identify “hot spots,” streets and neighborhoods that experienced a lot of gun violence and other crimes. The police would then put more patrols in those places. As part of the stepped-up policing, officers would submit information about people they had stopped in high-crime districts to a Chronic Offenders Bulletin, which flagged individuals whom the LAPD thought were likely to be repeat offenders.

This was predictive policing, a controversial practice in which quantitative analysis is used to pinpoint areas prone to crime and individuals who are likely to commit or fall victim to crimes. To critics, predictive policing is something straight out of the Tom Cruise thriller Minority Report, in which psychics identify murderers before they kill, but even more insidious. They believe that data-driven policing reinforces biases that have long plagued America’s criminal justice system and inevitably leads to racial profiling.

Karp was unmoved by that argument. In his judgment, crime was crime, and if it could be prevented or reduced through the use of data, that was a net plus for society. Blacks and Latinos, no less than whites, wanted to live in safe communities. And for Karp, the same logic that guided Palantir’s counterterrorism work applied to its efforts in law enforcement: people needed to feel safe in their homes and on their streets, and if they didn’t, they would embrace hard-line politicians who would have no qualms about trampling on civil liberties to give the public the security it demanded. Palantir’s software, at least as Karp saw it, was a mechanism for delivering that security without sacrificing privacy and other personal freedoms.

However, community activists in Los Angeles took a different view of Palantir and the kind of police work that the company was enabling. An organization called the Stop LAPD Spying Coalition organized protests and also published studies highlighting what it claimed was algorithm-driven harassment of predominantly black and Latino neighborhoods and of people of color. LASER, it said, amounted to a “racist feedback loop.” In the face of criticism, the LAPD grew increasingly sensitive about its predictive policing efforts and its ties to Palantir.

To Karp, the fracas over Palantir’s police contracts was emblematic of what he saw as the left’s descent into mindless dogmatism. He said that many liberals now seemed “to reject quantification of any kind. And I don’t understand how being anti-quantitative is in any way progressive.”

Karp said that he was actually the true progressive. “If you are championing an ideology whose logical consequence is that thousands and thousands and thousands of people over time that you claim to defend are killed, maimed, go to prison, how is what I’m saying not progressive when what you are saying is going to lead to a cycle of poverty?”

He conceded, though, that partnering with local law enforcement, at least in the United States, was just too complicated. “Police departments are hard because you have an overlay of legitimate ethical concerns,” Karp said. “I would also say there is a politicization of legitimate ethical issues to the detriment of the poorest members of our urban environments.”

He acknowledged, too, that the payoff from police work wasn’t enough to justify the agita that came with it. And in fact, there hadn’t been much of a payoff; indeed, Palantir’s technology was no longer being used by any U.S. police departments. The New York City Police Department had terminated its contract with Palantir in 2017 and replaced the company’s software with its own data analysis tool. In 2021, the Los Angeles Police Department had ended its relationship with Palantir, partly in response to growing public pressure. So had the city of New Orleans, after an investigation by The Verge prompted an uproar.

But Palantir still had contracts with police departments in several European countries. And since 2014, Palantir’s software has been used in domestic operations by U.S. Immigration and Customs Enforcement, work that has expanded under the second Trump administration and drawn criticism from a number of former employees.

In 2019, when I was working on my story about Palantir for The New York Times Magazine, I tried to meet with LAPD officials to talk about the company’s software, but they declined. Six years earlier, however, a Princeton doctoral candidate named Sarah Brayne, who was researching the use of new technologies by police departments, was given remarkable access to the LAPD. She found that Palantir’s platform was used extensively, with a couple of thousand LAPD employees having access to the software, and was taking in and merging a wide range of data, from phone numbers to field interview cards (filed by police every time they made a stop) to images culled from automatic license plate readers, or ALPRs.

Through Palantir, the LAPD could also tap into the databases of police departments in other jurisdictions, as well as those of the California state police. In addition, they could pull up material that was completely unrelated to criminal justice: social media posts, foreclosure notices, utility bills.

Via Palantir, the LAPD could obtain a trove of personal information. Not only that: through the network analysis that the software performed, the police could identify a person of interest’s family members, friends, colleagues, associates, and other relations, putting all of them in the LAPD’s purview. It was a virtual dragnet, a point made clear by one detective who spoke to Brayne.
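The dragnet effect of network analysis is easy to see in miniature. The sketch below is a generic breadth-first search over a made-up contact graph, offered only to illustrate the concept; it says nothing about how Palantir’s software is actually built, and the function name, the graph format, and the two-hop limit are all assumptions chosen for the example.

```python
from collections import deque


def associates_within(graph, person, max_hops=2):
    """Return everyone reachable from `person` in at most `max_hops` links.

    `graph` is a hypothetical adjacency mapping: name -> list of known contacts.
    The result maps each reachable name to its distance in hops.
    """
    seen = {person: 0}
    queue = deque([person])
    while queue:
        current = queue.popleft()
        if seen[current] == max_hops:
            continue  # Do not expand past the hop limit.
        for neighbor in graph.get(current, ()):
            if neighbor not in seen:
                seen[neighbor] = seen[current] + 1
                queue.append(neighbor)
    del seen[person]  # The starting person is not their own associate.
    return seen
```

Even in a toy graph, a single starting name pulls in people who have no direct connection to it, and each additional hop widens the circle further.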

“Let’s say I have something going on with the medical marijuana clinics where they’re getting robbed,” he said. “I can put in an alert to Palantir that says anything that has to do with medical marijuana plus robbery plus male, black, six foot.” He readily acknowledged that these searches could just be fishing expeditions and even used a fishing metaphor. “I like throwing the net out there, you know?” he said.

Brayne’s research showed the potential for abuse. It was easy, for instance, to conjure nightmare scenarios involving ALPR data. A detective might discover that a reluctant witness was having an affair and use that information to coerce his testimony. There was also the risk of misconduct outside the line of duty: an unscrupulous analyst could conceivably use Palantir’s software to keep tabs on his ex-wife’s comings and goings. Beyond that, millions of innocent people were unknowingly being pulled “into the system” simply by driving their cars.

When I spoke to Brayne, she told me that what most troubled her about the LAPD’s work with Palantir was the opaqueness.

“Digital surveillance is invisible,” she said. “How are you supposed to hold an institution accountable when you don’t know what they’re doing?”

Adapted from The Philosopher in the Valley: Alex Karp, Palantir, and the Rise of the Surveillance State by Michael Steinberger. Copyright © 2025. Reprinted by permission of Avid Reader Press, an Imprint of Simon & Schuster Inc.